Ray Tune

The hyperparameter optimization page on Wikipedia is full of interesting links. It will take me some time to explore them, as I am not satisfied with having only a basic knowledge of grid and random search.

I picked at random one of the links in the list of open source software for hyperparameter optimization and ended up at Ray Tune. I was expecting some obscure tool, but instead I discovered a superb site full of documentation and examples.

Quoting the documentation, Ray is defined as follows:

Ray is a unified framework for scaling AI and Python applications. Ray consists of a core distributed runtime and a toolkit of libraries (Ray AIR) for simplifying ML compute

Today’s ML workloads are increasingly compute-intensive. As convenient as they are, single-node development environments such as your laptop cannot scale to meet these demands.

Ray is a unified way to scale Python and AI applications from a laptop to a cluster.

With Ray, you can seamlessly scale the same code from a laptop to a cluster. Ray is designed to be general-purpose, meaning that it can performantly run any kind of workload. If your application is written in Python, you can scale it with Ray, no other infrastructure required.

https://docs.ray.io/en/latest/index.html

I have just looked a bit into the Tune module:

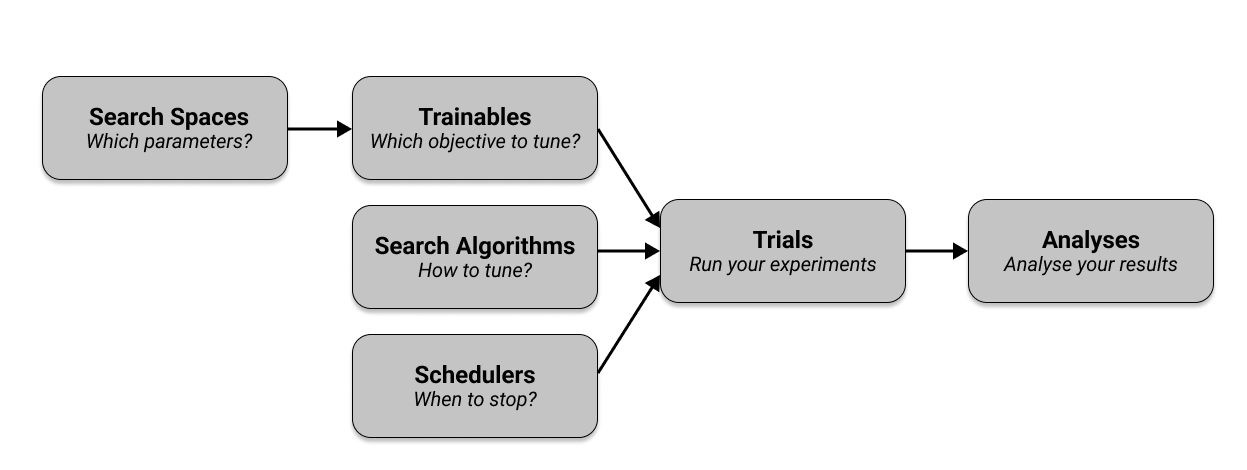

With a few lines of Python you can define the acceptable range for each of your hyperparameters. You can then configure a search algorithm to explore the combinations (not just random and grid search) and a scheduler to decide when a trial can be stopped early. You pass the framework the function you want to optimize, and it runs many trials searching for the best parameter combination. Looking at the quickstart example, I imagine you can define a set of experiments like this:

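    from ray import tune

    # Define a search space.
    search_space = {
        # Try every listed dropout value.
        "dropout": tune.grid_search([0.001, 0.01, 0.1]),
        # Pick one optimizer at random for each trial.
        "optimizer": tune.choice(["adagrad", "adadelta", "sgd"]),
    }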
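To convince myself of what a tuner does with such a search space, I wrote a small library-free sketch. It is not Ray's implementation: `expand_search_space` and `toy_objective` are names I made up, and the objective is an invented score standing in for a real validation loss. The idea is simply that grid parameters are enumerated exhaustively, sampled parameters are drawn at random for each trial, and the best configuration is the one with the lowest score.

```python
import itertools
import random


def expand_search_space(grid_params, sampled_params, num_samples, seed=0):
    """Build trial configs: every grid combination, repeated num_samples times,
    with each sampled parameter drawn at random for every trial."""
    rng = random.Random(seed)
    names = list(grid_params)
    configs = []
    for _ in range(num_samples):
        for values in itertools.product(*(grid_params[n] for n in names)):
            config = dict(zip(names, values))
            for name, choices in sampled_params.items():
                config[name] = rng.choice(choices)
            configs.append(config)
    return configs


def toy_objective(config):
    # Hypothetical score standing in for a validation loss (lower is better).
    penalty = {"adagrad": 0.3, "adadelta": 0.2, "sgd": 0.1}[config["optimizer"]]
    return abs(config["dropout"] - 0.01) + penalty


configs = expand_search_space(
    grid_params={"dropout": [0.001, 0.01, 0.1]},
    sampled_params={"optimizer": ["adagrad", "adadelta", "sgd"]},
    num_samples=4,
)
best = min(configs, key=toy_objective)
print(len(configs), "trials, best config:", best)
```

With three grid values and four samples this produces twelve trials. A real tuner adds smarter search algorithms, parallel execution and result tracking on top of this basic loop.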

The quickstart is available on this page: https://docs.ray.io/en/latest/tune/index.html, where you can also see examples for Keras and PyTorch.
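The schedulers mentioned above decide when a trial can be stopped early. As a rough illustration of the idea, here is a minimal sketch of successive halving, the scheme that schedulers such as ASHA build on. It is plain Python and does not use Ray: `simulated_loss` is an invented learning curve and `successive_halving` is my own simplified version, not the library's code.

```python
def simulated_loss(config, steps):
    # Toy learning curve (an assumption for illustration): the loss decays
    # with the number of training steps towards a floor set by the dropout.
    floor = abs(config["dropout"] - 0.01)
    return floor + 1.0 / (1 + steps)


def successive_halving(configs, min_steps=1, max_steps=8):
    """Train all trials briefly, keep the better half, double the budget, repeat."""
    survivors = list(configs)
    steps = min_steps
    while len(survivors) > 1 and steps <= max_steps:
        # Rank the surviving trials at the current budget.
        survivors.sort(key=lambda c: simulated_loss(c, steps))
        # Stop the worse half early; the rest get more training steps.
        survivors = survivors[: max(1, len(survivors) // 2)]
        steps *= 2
    # If the budget ran out first, pick the best survivor at the full budget.
    return min(survivors, key=lambda c: simulated_loss(c, max_steps))


trials = [{"dropout": d} for d in [0.001, 0.01, 0.1, 0.5]]
winner = successive_halving(trials)
print("winner:", winner)
```

The point is that bad configurations receive only a small training budget, so most of the compute goes to the promising ones.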

This already sounds good, but looking around a bit more I found this page: Tune distributed experiments. Ray provides commands to deploy a cluster where you can run your experiments in parallel and collect the results, and it also handles failures. There are examples for AWS.

It seems really awesome.
